Who knew the irreverent show South Park could predict the future? But with the debut of the Generative AI program ChatGPT, we appear to have reached the “AWESOM-O” age of artificial intelligence - and along with it, AI-fueled fraud.

Did ‘South Park’ Predict ChatGPT?

In one episode of the animated show, the ever-obnoxious Eric Cartman pranks a hapless victim by wearing a cardboard box costume and pretending to be a robot named AWESOM-O 4000. The prank backfires, forcing Cartman to keep wearing the robot costume much longer than he expected.

Eventually, he winds up at a Hollywood studio where executives and writers (who are oblivious to the fact that they are talking to a kid in a cardboard box) hungrily ask “AWESOM-O” for movie ideas. Cartman, still disguised as a robot, ends up pitching roughly 2,000 ridiculous movie plots – including about 800 Adam Sandler movies. (Is that where the idea for The Ridiculous Six originated?)

Fast forward almost 20 years (Good grief! Has it been that long?) and South Park’s AWESOM-O has proven to be quite the oracle. The “real-life” AWESOM-O, ChatGPT, is capable of creating original content rapidly. But one thing the show didn’t predict was how the tool could be abused for fraud.

What is ChatGPT?

The artificial intelligence research organization OpenAI released ChatGPT in November 2022. It is described as a free search tool or chatbot capable of generating human-readable content in seconds. All a user has to do is enter a question and the program churns out a response that could easily be mistaken for copy written by a human being.

ChatGPT can write poetry, songs, and essays – using completely original phrasing. Capabilities like these alone make ChatGPT the stuff of English professors’ nightmares. Unfortunately, ChatGPT’s potential is more than a headache in the halls of academia. It also poses a significant problem for financial institutions and banks as fraudsters tap into the fraud capabilities of generative AI.

Financial institutions need to understand how Generative AI works to protect themselves and their customers from this type of fraud. Let’s dive into how the program operates and the different types of fraud it could unleash.

What is Generative AI?

If you look under the hood of AWESOM-O, you’ll find a mean kid in a cardboard box costume. Generative AI, naturally, is more complicated.

The term “Generative AI” refers to a type of artificial intelligence (AI) that can create new, previously unseen content. These programs are trained on large volumes of data. After the training period wraps up, the program can generate content based on the different patterns and features it learned.

Two well-known types of Generative AI models are Generative Adversarial Networks and Variational Autoencoders.

Generative Adversarial Networks (GANs): GANs are made up of two neural networks: a generator and a discriminator. The two networks are designed to compete against each other. The generator network works by creating new samples. Meanwhile, the discriminator tries to distinguish between its counterpart’s generated samples and real samples.
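To make that competition a little more concrete, here is a minimal sketch of how the two sides are typically scored against each other. It is a toy illustration under loud assumptions, not how ChatGPT or any production system is built: the one-number `generator` and `discriminator` functions, their parameters, and the sample values are all hypothetical, and the generator loss uses the common “non-saturating” form.

```python
import math

def discriminator(x, weight, bias):
    """Toy 1-D discriminator (hypothetical): probability that x is a real sample."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def generator(z, scale, shift):
    """Toy 1-D generator (hypothetical): turns random noise z into a synthetic sample."""
    return scale * z + shift

def gan_losses(real_samples, fake_samples, weight, bias):
    """Return (discriminator_loss, generator_loss) for one batch."""
    eps = 1e-12  # guards against log(0)
    d_real = [discriminator(x, weight, bias) for x in real_samples]
    d_fake = [discriminator(x, weight, bias) for x in fake_samples]
    # The discriminator is rewarded for scoring real samples near 1 and fakes near 0.
    d_loss = (-sum(math.log(p + eps) for p in d_real) / len(d_real)
              - sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake))
    # The generator is rewarded when the discriminator scores its fakes near 1.
    g_loss = -sum(math.log(p + eps) for p in d_fake) / len(d_fake)
    return d_loss, g_loss

# Illustrative values only: "real" data clusters near 5, the fakes near 0.
real = [4.8, 5.1, 5.0]
fake = [generator(z, scale=1.0, shift=0.0) for z in (-0.5, 0.2, 0.9)]
d_loss, g_loss = gan_losses(real, fake, weight=1.0, bias=-2.5)
```

In this setup the discriminator can easily tell the two groups apart, so its loss is low and the generator’s loss is high. Training would nudge the generator’s parameters until its samples become harder to separate from the real ones, which is exactly the property fraudsters exploit.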

Variational Autoencoders (VAEs): VAEs are built on two core components: an encoder and a decoder. The encoder network learns a compact representation of the input data. From there, the decoder generates new samples from this representation.
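The encoder-decoder hand-off can also be sketched in a few lines. This is a toy, untrained example under stated assumptions: the single-number `encode` and `decode` functions and their weights are hypothetical stand-ins for real neural networks, and the loss shown is the standard pairing of a reconstruction error with a KL-divergence penalty that keeps the compact representation close to a simple bell curve.

```python
import math
import random

def encode(x, w_mu, w_logvar):
    """Toy encoder (hypothetical): map x to the mean and log-variance of a 1-D latent code."""
    return w_mu * x, w_logvar * x

def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * noise, where sigma = exp(0.5 * logvar)."""
    return mu + math.exp(0.5 * logvar) * rng.gauss(0.0, 1.0)

def decode(z, w_out):
    """Toy decoder (hypothetical): map a latent code z back to data space."""
    return w_out * z

def vae_loss(x, x_hat, mu, logvar):
    """Squared reconstruction error plus the KL divergence from N(mu, sigma^2) to N(0, 1)."""
    reconstruction = (x - x_hat) ** 2
    kl = -0.5 * (1.0 + logvar - mu ** 2 - math.exp(logvar))
    return reconstruction + kl

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Training-time pass: compress an input, rebuild it, and score the result.
x = 1.5
mu, logvar = encode(x, w_mu=0.8, w_logvar=-0.4)
z = reparameterize(mu, logvar, rng)
x_hat = decode(z, w_out=1.2)
loss = vae_loss(x, x_hat, mu, logvar)

# Generation-time pass: skip the encoder, draw a code at random, and decode it.
new_sample = decode(rng.gauss(0.0, 1.0), w_out=1.2)
```

The last line is the part that matters for fraud: once the decoder is trained, it can turn random codes into brand-new samples that resemble the training data.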

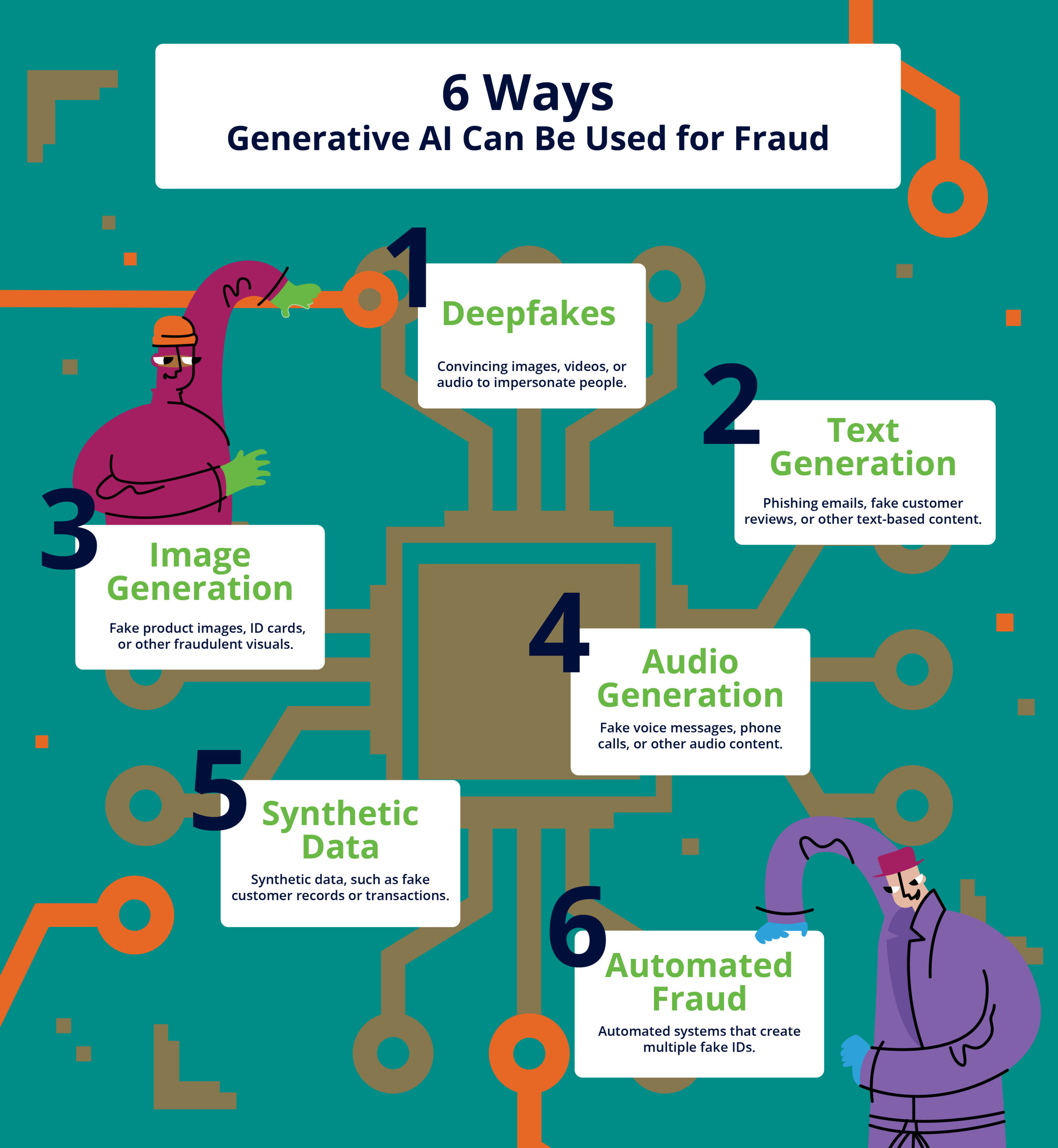

6 Types of Generative AI Fraud Threats to Watch

When asked how Generative AI can be used for fraud, ChatGPT was quick to provide a list. Here are some of the tools that just became more widely available to malicious actors.

- Deepfakes: Fraudsters can use deepfake technology to create convincing images, videos, or audio of real people or events to impersonate individuals.

- Text Generation: A text generator can create phishing emails, fake customer reviews, or other fake text-based content.

- Image Generation: Fraudsters may use image generation AI models to create fake product images, fake ID cards, or other fraudulent visuals.

- Audio Generation: Generative AI can create fake voice messages, fake phone calls, or other fraudulent audio content. One researcher used the technology to fake his own voice and demand a refund from his bank.

- Synthetic Data: Generative AI can create synthetic data, such as fake customer records or fake transactions, that can be used to commit fraud or evade detection.

- Automated Fraud: Fraudsters may use Generative AI to create automated systems that can commit fraud at scale using social engineering. For example, it can create multiple fake identities or accounts to make unauthorized transactions.

These are just a few examples of ChatGPT’s fraud capabilities. Unfortunately, it’s not the final list. There are already reports that hackers are seeking ways to bypass ChatGPT’s built-in restrictions. In one underground hacking forum, users discussed how to pay for the program’s services using stolen credit cards.

Sergey Shykevich, threat intelligence group manager at Check Point Software Technologies, recently said Russian hackers are particularly interested in ChatGPT’s use cases to make fraud more “cost-effective.” Worse yet, like the Hollywood writers turning to Cartman’s AWESOM-O for ideas, it seems cybercriminals are looking to ChatGPT for ways to “democratize” cybercrime.

If that’s not a bad omen for banks, I’m not sure what is.

How Can Banks Prevent Generative AI-based Fraud?

Banks can take several measures to detect and prevent fraud that involves Generative AI. These may include:

- Anomaly detection: Banks can use machine learning algorithms to detect unusual patterns or transactions that may indicate fraud.

- Behavioral biometrics: Banks can analyze users’ typing patterns, mouse movements, and other behavioral data to identify potentially fraudulent activity.

- Data visualization: Banks can use data visualization tools to identify patterns and anomalies in large sets of data, which can help them detect potential fraud.

- Human review: Banks can also have trained human analysts review transactions and other data to identify potential fraud.

- Regular updates of the AI models: Banks can regularly retrain and update their AI models so they can detect new types of fraud.
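As a rough illustration of the anomaly detection item in the list above, the sketch below flags transaction amounts that sit far outside a customer’s usual range. It is a deliberately simple statistical stand-in (a median-based “modified z-score”), not the machine learning models a bank would actually deploy. The function name `flag_unusual_amounts`, the 3.5 cutoff, and the transaction amounts are all assumptions made up for the example.

```python
from statistics import median

def flag_unusual_amounts(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds the threshold."""
    center = median(amounts)
    # Median absolute deviation: a measure of spread that outliers barely affect.
    mad = median([abs(a - center) for a in amounts])
    if mad == 0:
        return []  # no spread to compare against, so nothing can be scored
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores.
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - center) / mad > threshold
    ]

# Hypothetical card history: seven everyday purchases and one large payment.
history = [42.10, 18.75, 63.00, 25.40, 51.20, 37.90, 4800.00, 29.99]
suspicious = flag_unusual_amounts(history)
```

Here only the 4,800.00 payment (index 6) is flagged. A real system would look at far more than the amount, such as the device, location, timing, and payee, and would pass flagged items to the human reviewers mentioned above.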

It is also important for banks to have robust internal controls and compliance systems in place to detect and prevent fraud involving generative AI.

Banks should continuously monitor and update their anti-fraud measures to stay ahead of the ever-evolving fraud landscape. The threats from Generative AI are no exception. It’s highly likely that new methods of fraud using Generative AI will emerge over time. Therefore, it’s important for financial institutions to stay up-to-date on the latest trends and advancements in Generative AI.

Generative AI like ChatGPT is here to stay. But we’re only just starting to scratch the surface of its potential. Unfortunately, criminals and fraudsters have proven time and time again to have powerful imaginations when it comes to making new advancements work in their favor. The headaches teachers and professors will face in stopping plagiarism are nothing compared to the Generative AI-driven fraud wave we’re very likely to face in the near future. And unfortunately, it’s too late to ask AWESOM-O – the OG ChatGPT – for help.
