Deep learning (DL), a subset of machine learning, has revolutionized several fields in the last decade, from computer vision to natural language and speech processing. While deep learning is typically applied to unstructured data (e.g., images, natural language, or audio), here at Feedzai we mostly work with tabular data: tables whose columns list various attributes (e.g., amount, merchant, or hour of the day). In our tables, each row is an individual transaction labeled as “fraud” or “not fraud.”

It’s less popular to use deep learning for tabular data than for unstructured data, but that’s changing as the DL community rapidly evolves. Numerous scientific papers on deep learning have been published, and some of the biggest tech companies have dedicated ample resources to research this subset of machine learning.

Our culture of continuous innovation got us wondering – could Feedzai use deep learning to improve our fraud detection models?

We’ve detailed our exploration into deep learning below. Let the adventure begin!

The typical transactional case: tabular data

Let’s dive into how Feedzai’s fraud detection system functions sans deep learning.

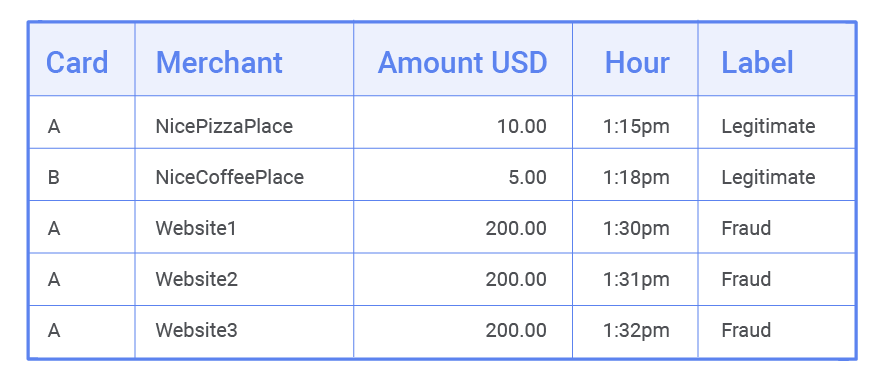

Imagine we use the following (dummy) dataset to train a model to classify transactions as fraud or not fraud:

Let’s say the last three transactions have been labeled as fraud. Based on the provided attributes (three $200 transactions with the same card on three different websites in three minutes), they do seem suspicious. However, if we feed the dataset to a classifier, each transaction is looked at independently.

A model trained on this dummy dataset would fail to learn why the activity is suspicious (the same card was used for three payments on different websites over a short time). Instead, it would probably flag these transactions for the wrong reason, concluding that $200 is a particularly risky amount, which is unlikely to be the case.

Usually, our fraud detection system addresses this by enriching each transaction, or row in the table, with profiles (new columns that convey past information about the entities involved in the transaction). Depending on the use case, these entities are not limited to credit cards; they can also include merchants, emails, or device IDs, that is, any field whose history over time may help us detect fraud. These profiles typically involve aggregations over sliding windows of X minutes, days, or even months. Some examples include:

- the number of transactions or total amount spent by a particular credit card over the last X hours or days;

- the number of distinct emails associated with a shipping address in online transactions over the last X days or weeks;

- the ratio of the current transaction amount to the credit card’s mean amount per transaction over the last X months.

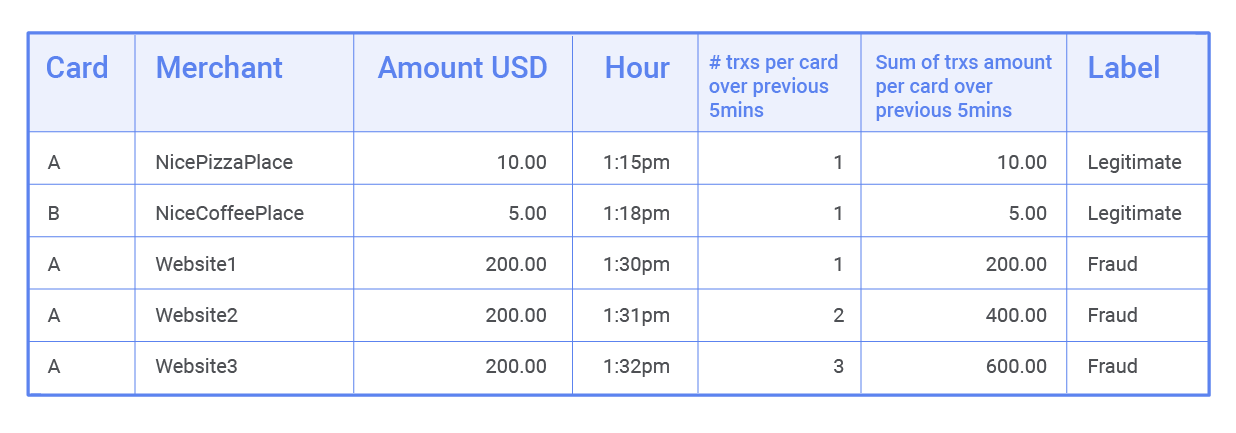

In our dummy example, computing the count of transactions and the sum of transactions per card over 5-minute windows would look like this:

After adding profiles, the enriched dataset looks like this:

Our aim is for these new columns to convey useful information about the entities’ histories. If we train a Random Forest on this enriched dataset, each row is still considered independently. However, each row now carries past information, which enables the model to detect fraudulent transaction sequences.
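To make the idea of a profile concrete, here is a minimal sketch of the per-card count and sum over a trailing 5-minute window. It is an illustration only, not Feedzai's implementation: the function name `add_card_profiles`, the dictionary keys, and the sample rows are all hypothetical, and we assume the transactions arrive sorted by timestamp (in seconds).

```python
from collections import defaultdict, deque


def add_card_profiles(transactions, window_seconds=300):
    """Return copies of the rows with a per-card count and sum over a trailing window.

    Assumes `transactions` is sorted by 'timestamp' (seconds). The keys
    'card', 'timestamp' and 'amount' are hypothetical column names.
    """
    history = defaultdict(deque)      # card -> (timestamp, amount) pairs still in the window
    running_sum = defaultdict(float)  # card -> sum of the amounts still in the window
    enriched = []
    for tx in transactions:
        card, ts, amount = tx["card"], tx["timestamp"], tx["amount"]
        window = history[card]
        # Evict events that are window_seconds old or older.
        while window and window[0][0] <= ts - window_seconds:
            _, old_amount = window.popleft()
            running_sum[card] -= old_amount
        # The current transaction counts towards its own profile.
        window.append((ts, amount))
        running_sum[card] += amount
        enriched.append({**tx, "count_window": len(window), "sum_window": running_sum[card]})
    return enriched


# Made-up rows for illustration only (not real data).
rows = add_card_profiles([
    {"card": "A", "timestamp": 0, "amount": 200.0},
    {"card": "B", "timestamp": 30, "amount": 50.0},
    {"card": "A", "timestamp": 60, "amount": 200.0},
    {"card": "A", "timestamp": 120, "amount": 200.0},
    {"card": "A", "timestamp": 500, "amount": 20.0},
])
for row in rows:
    print(row["card"], row["timestamp"], row["count_window"], row["sum_window"])
```

Note that the sketch has to keep a queue of recent events for every card. Multiply that by many entities and many windows, and you get a feel for the state a profile-based system has to maintain.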

The downside of profiles

Profiles work immensely well in most use cases and frequently boost our detection results. However, they still have two downsides:

- even with Feedzai’s AutoML, it takes time to compute hundreds of profiles before training a model; and

- in production, maintaining and updating profiles and the related state consumes a ton of memory.

Deep learning would allow us to go in a different direction. Instead of reacting to issues when we try to enrich transactions with profiles, we can proactively find solutions that forgo explicit profile computations. Could we achieve the same or even better results with the help of deep learning?

How recurrent neural networks (RNNs) work

RNNs, neural networks that work on sequences of inputs, contrast with classifiers such as Random Forests or feedforward neural networks, which look at individual inputs independently. RNNs have revolutionized natural language processing (where we typically deal with sequences of words), speech recognition (sequences of short sound clips), video classification (sequences of frames), and other areas.

It’s possible to apply RNNs to the problem we’re trying to solve. If we know that the history of an entity over time is important, we can move away from classifying a transaction independently. Instead, we can classify the sequence of transactions that led to the one we want to classify. To achieve this, we encode knowledge of a card’s history up to the current point in time in a state vector, and then use that state to predict fraud. During training, we want to make the model learn how to:

- classify transactions based on the state; and

- update the state at each step of the sequence to make it capture relevant information.

There are several variants of RNNs, including LSTMs and GRUs. We’ve mostly been using GRUs; they are slightly easier to train, and the results of both variants are roughly the same. The figure below shows what happens when we train the model with historical data.

- We start with a state vector initialized to zero for each card.

- Then we take a sequence of a card’s transactions and feed it through the RNN. For each transaction, we predict if it’s fraudulent or legitimate and update the state that is passed on to the next transaction.

- We do this with a batch of cards.

- After we make predictions for all transactions of the batch’s cards, we compare the predictions with the labels. We compute the loss for each transaction (a measure of how far our predictions are from the correct labels) and adjust the model through stochastic gradient descent.

- Rinse and repeat. We keep doing this with different batches of cards and watch the model improve over time.
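As a rough illustration of the "compute the loss for each transaction" step above, the sketch below averages a per-transaction loss over a batch of cards. We assume binary cross-entropy here because it is a common choice for fraud / not-fraud labels; the post does not say which loss was used, and the function names are hypothetical.

```python
import math


def binary_cross_entropy(prob, label, eps=1e-12):
    """Loss for one transaction: small when `prob` agrees with `label` (0 or 1)."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def batch_loss(predictions, labels):
    """Mean loss over every transaction of every card in the batch.

    `predictions` and `labels` are lists of per-card sequences, so cards may
    have different numbers of transactions.
    """
    losses = [
        binary_cross_entropy(p, y)
        for card_preds, card_labels in zip(predictions, labels)
        for p, y in zip(card_preds, card_labels)
    ]
    return sum(losses) / len(losses)


# Two hypothetical cards: one with three transactions, one with two.
labels = [[0, 0, 1], [0, 0]]
good = batch_loss([[0.1, 0.2, 0.9], [0.1, 0.1]], labels)
bad = batch_loss([[0.9, 0.8, 0.1], [0.9, 0.9]], labels)
print(good < bad)
```

In a real training loop, a framework would compute the gradient of this average with respect to the model's weights and take a gradient descent step; that part is omitted here.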

Each card is a different sequence with its own evolving state vector. However, the learnable blocks in the model (the blocks we gradually adjust to minimize the average error during the training process) are shared across sequences and steps within each sequence:

- the blue “GRU cell” block — this block produces a new state vector for a card, given its previous state vector and an incoming transaction;

- the orange “classifier” block — this block produces a prediction based on the state vector and a transaction.
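For readers who want to see the two blocks in code, here is a small, untrained sketch in plain Python. It uses one common formulation of the GRU update (an update gate, a reset gate, and a candidate state) and, as an assumption on our side, a single logistic layer as the classifier. The class names, sizes, and random weights are hypothetical; in practice you would use a framework's GRU implementation and learn the weights.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def affine(weights, bias, vector):
    """Return weights @ vector + bias for list-based matrices."""
    return [sum(w * v for w, v in zip(row, vector)) + b for row, b in zip(weights, bias)]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Produces a new state from the previous state and an incoming transaction."""

    def __init__(self, input_size, state_size, seed=0):
        rng = random.Random(seed)
        joint = input_size + state_size
        self.state_size = state_size
        # One weight matrix per gate, applied to concat(features, state).
        self.w_update = random_matrix(state_size, joint, rng)
        self.w_reset = random_matrix(state_size, joint, rng)
        self.w_candidate = random_matrix(state_size, joint, rng)
        self.b_update = [0.0] * state_size
        self.b_reset = [0.0] * state_size
        self.b_candidate = [0.0] * state_size

    def step(self, features, state):
        joint = list(features) + state
        update = [sigmoid(v) for v in affine(self.w_update, self.b_update, joint)]
        reset = [sigmoid(v) for v in affine(self.w_reset, self.b_reset, joint)]
        # The reset gate decides how much of the old state feeds the candidate.
        gated = list(features) + [r * h for r, h in zip(reset, state)]
        candidate = [math.tanh(v) for v in affine(self.w_candidate, self.b_candidate, gated)]
        # The update gate blends the old state with the candidate.
        return [(1.0 - z) * h + z * c for z, h, c in zip(update, state, candidate)]


class Classifier:
    """Logistic layer over the state vector and the transaction (an assumption)."""

    def __init__(self, input_size, state_size, seed=1):
        rng = random.Random(seed)
        self.weights = [rng.uniform(-0.5, 0.5) for _ in range(input_size + state_size)]
        self.bias = 0.0

    def predict(self, features, state):
        inputs = list(features) + state
        return sigmoid(sum(w * v for w, v in zip(self.weights, inputs)) + self.bias)


def score_sequence(cell, classifier, sequence):
    """Score every transaction of one card, starting from a zero state."""
    state = [0.0] * cell.state_size
    scores = []
    for features in sequence:
        state = cell.step(features, state)
        scores.append(classifier.predict(features, state))
    return scores, state


cell = GRUCell(input_size=2, state_size=3)
classifier = Classifier(input_size=2, state_size=3)
sequence = [[0.2, 0.1], [0.5, 0.3], [0.7, 0.7]]  # made-up, already-scaled features
scores, final_state = score_sequence(cell, classifier, sequence)
print(scores)
```

The same `cell` and `classifier` objects are reused at every step and for every card, which is what "shared across sequences and steps" means; only the state vector is specific to a card.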

We skipped most of the details, but each of these two blocks is a neural network. Sounds complicated to implement? It isn’t anymore. With tools such as TensorFlow, Keras, and PyTorch, it’s easy. Most of these frameworks offer implementations for several RNN variants.

Can we use this in a real-time setting?

It may look like this is impossible to use in a real-time setting, considering latency and memory constraints. After all, to get a prediction for the 100th transaction of a card, we would need to feed the model the entire sequence of 100 transactions — and this number could get much bigger! The trick here is that the information we keep about a sequence is wholly encoded in its state vector. If we keep a table in memory with the most recent state for each credit card, we need to carry out the following when a new transaction arrives:

- Fetch the current state for the given card number from memory.

- Feed the current transaction and the state we just fetched to a GRU cell, yielding the new state for this card number.

- Feed the new state to the final block to get a prediction.

- Update the state table with the new state for the given card.

In practice, the predictions are the same as what we’d see if we fed entire sequences every time we wanted to score a transaction. By keeping track of the states, we can expect the model to take a similar amount of time to score each transaction, regardless of whether it’s the first or the millionth one for that card. Memory-wise, we just need to keep a record of the most recent state per card.
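The four steps above fit in a few lines. The sketch below is a simplified illustration, not production code: the state table is a plain dictionary, and `toy_cell` and `toy_classifier` are hypothetical stand-ins for a trained GRU cell and classifier block. It also checks the claim that scoring from the stored state gives the same result as replaying a card's whole history.

```python
import math


def score_transaction(state_table, card_id, features, cell, classifier, state_size):
    """Score one incoming transaction using only the card's stored state."""
    state = state_table.get(card_id)          # 1. fetch the current state
    if state is None:
        state = [0.0] * state_size            #    new cards start from zeros
    new_state = cell(features, state)         # 2. update the state
    score = classifier(new_state)             # 3. predict from the new state
    state_table[card_id] = new_state          # 4. store the new state
    return score


def score_full_sequence(sequence, cell, classifier, state_size):
    """Reference: replay a card's entire history to score its last transaction."""
    state = [0.0] * state_size
    for features in sequence:
        state = cell(features, state)
    return classifier(state)


def toy_cell(features, state):
    # Hypothetical stand-in for a trained GRU cell.
    return [math.tanh(0.5 * s + 0.5 * x) for s, x in zip(state, features)]


def toy_classifier(state):
    # Hypothetical stand-in for the trained classifier block.
    return 1.0 / (1.0 + math.exp(-sum(state)))


# Interleaved transactions from two made-up cards.
stream = [("A", [0.2, 0.1]), ("B", [0.9, 0.4]), ("A", [0.5, 0.3]), ("A", [0.7, 0.7])]
state_table = {}
live_scores = [
    score_transaction(state_table, card, feats, toy_cell, toy_classifier, state_size=2)
    for card, feats in stream
]
replayed = score_full_sequence(
    [feats for card, feats in stream if card == "A"], toy_cell, toy_classifier, state_size=2
)
print(live_scores[-1] == replayed)
```

Each call does a constant amount of work and touches one entry of the table, no matter how long the card's history is.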

Key takeaway

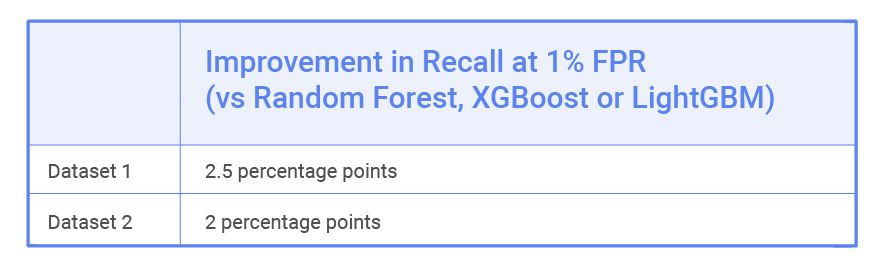

So far, in every case where we’ve tried using similar RNN models, our previous top-performing models (Random Forests, XGBoost, or LightGBM) have been outdone. The table below shows the improvements in transaction recall at a 1% false-positive rate — a common baseline metric — for two different datasets. In dataset 1, for example, this improvement would save an estimated $1 million in fraud per year. These models didn’t need much fine-tuning, so we expect that the results could even improve if hyperparameters were more thoroughly optimized.

The main takeaway here, though, is that neither profiles nor explicit feature engineering were needed to obtain these results. Without profiles, this setup requires only a fraction of the memory typically needed to run in production.
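If the metric quoted above is unfamiliar: recall at a 1% false-positive rate is the share of fraud we catch when the alert threshold is set so that at most 1% of legitimate transactions are flagged. Below is a minimal sketch of one way to compute it. The function name and the sample scores are hypothetical, and this is not necessarily how the reported numbers were produced.

```python
def recall_at_fpr(scores, labels, max_fpr=0.01):
    """Highest recall reachable by a score threshold whose FPR is at most `max_fpr`.

    `scores` are model outputs (higher means more suspicious) and `labels`
    are 1 for fraud and 0 for legitimate transactions.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one fraud and one legitimate transaction")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    best_recall = 0.0
    true_pos = false_pos = 0
    i = 0
    while i < len(ranked):
        # Lower the threshold one distinct score at a time; tied scores are
        # flagged together because a threshold cannot separate them.
        j = i
        while j < len(ranked) and ranked[j][0] == ranked[i][0]:
            if ranked[j][1] == 1:
                true_pos += 1
            else:
                false_pos += 1
            j += 1
        if false_pos / n_neg > max_fpr:
            break
        best_recall = true_pos / n_pos
        i = j
    return best_recall


# Made-up scores and labels, for illustration only.
example_scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
example_labels = [1, 1, 0, 1, 0, 0]
print(recall_at_fpr(example_scores, example_labels, max_fpr=0.01))
```

With so few legitimate examples, a 1% budget allows no false positives at all, so only the two frauds ranked above the first legitimate transaction are counted.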

Next steps for our deep learning adventure

Not everything is easier with this approach. It’s more challenging to explain model decisions than it is when you use Random Forests or Gradient Boosting Machines, for instance. Another point to note is the underlying assumption that we should consider sequences of transactions grouped by one entity. This works for use cases involving transaction monitoring for issuers, where we have access to the entire history of each card’s transactions and the card becomes the most important (and easiest) entity to profile; this may not work as well when our model benefits from profiles over a number of different entities, as is usually the case with transaction monitoring for online merchants.

We’ve already started tackling both these issues, so stay tuned for news sometime soon. However, overall, it’s exciting that this solution can achieve better results while completely side-stepping all hand-crafted features and profiles, which are costly in terms of human and computational resources.

Interested in reading the original post or other similar articles? Check out the Feedzai TechBlog, a compilation of tales on how we fight villains through data science, AI and engineering.

This work was done in collaboration with Mariana Almeida and Bernardo Branco.
